Abstract

Based on the theory of “snapshot/pullback attractors”, we show that important features of the climate change that we are observing can be understood by imagining many replicas of Earth that are not interacting with each other. Their climate systems evolve in parallel, but not in the same way, although they all obey the same physical laws, in harmony with the chaotic-like nature of the climate dynamics. These parallel climate realizations evolving in time can be considered as members of an ensemble. We argue that the contingency of our Earth’s climate system is characterized by the multiplicity of parallel climate realizations rather than by the variability that we experience in a time series of our observed past. The natural measure of the snapshot attractor enables one to determine averages and other statistical quantifiers of the climate at any instant of time. In this paper, we review the basic idea for climate changes associated with monotonic drifts, and illustrate the large number of possible applications. Examples are given in a low-dimensional model and in numerical climate models of different complexity. We recall that systems undergoing climate change are not ergodic, hence temporal averages are generically not appropriate for the instantaneous characterization of the climate. In particular, teleconnections, i.e. correlated phenomena of remote geographical locations are properly characterized only by correlation coefficients evaluated with respect to the natural measure of a given time instant, and may also change in time. Physics experiments dealing with turbulent-like phenomena in a changing environment are also worth being interpreted in view of the attractor-based ensemble approach. The possibility of the splitting of the snapshot attractor to two branches, near points where the corresponding time-independent system undergoes bifurcation as a function of the changing parameter, is briefly mentioned. This can lead in certain climate-change scenarios to the coexistence of two distinct sub-ensembles representing dramatically different climatic options. The problem of pollutant spreading during climate change is also discussed in the framework of parallel climate realizations.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introductory Comments

The traditional theory of chaos in dissipative systems has taught us that on a chaotic attractor there is a plethora of states, all compatible with the single equation of motion of the problem belonging to a fixed set of parameters [1, 2]. The existence of these many different states can be understood in terms of time series. Let us consider a time evolution whose initial position in the phase space lies on the attractor itself, and another one emanated from a nearby point of the attractor. It is well-known that these time series considerably deviate and visit states lying far away from each other on the attractor. The extended size of a chaotic attractor and the sensitivity to initial conditions (unpredictability) are therefore two sides of the same coin. In traditional cases chaotic attractors are low-dimensional and do not depend on time, or can be made time-independent by a properly chosen sampling (stroboscopic mapping).

The dynamics of the atmosphere and the oceans is turbulent, weather, therefore, exhibits chaos-like properties, and is certainly unpredictable [3]. This is also true in the more general context of the climate system [4, 5], which incorporates sea ice and land surface processes including biology for the description of century-scale dynamics. The “chaotic attractor” of the climate system is, however, of very large dimensionality and is not easy to visualize. We are unable to plot this attractor and consider its size as a measure of unpredictability. For a qualitative view of this property, we can accept that there is a multitude of possible states, all governed by the same laws of physics and external forcing. In a stationary case, it is worth speaking of parallel states of the climate. This is a concept that helps in making the term “internal variability” [6] plausible: even if we observe only a single state at a given time instant, many others would also be plausible, simply due to the chaotic nature of the dynamics. It is important to note that the distribution of the parallel states is not arbitrary: an additional property of attractors with chaotic properties is the existence of a unique probability measure, the natural measure [7], which describes the distribution of the permitted states in the phase space [1, 2, 7]. In stationary climate, the external forcing is constant or periodic (with the longest considered period given by the annual cycle), and the dynamical system is autonomous when observed on the same day of the year. A forcing of general time dependence, leads to a changing climate, a fully nonautonomous dynamics. The main message of this work is that climate should then be characterized by a set of permitted “parallel climate realizations”.

The paper is organized as follows. In the next Section we show that a changing climate can be described by an extension of the traditional theory of chaotic attractors: in particular, the theory of snapshot/pullback attractors [8, 9] appears to be an appropriate tool to handle the problem. This necessitates investigating ensembles of “parallel climate realizations” to be discussed in detail in Sect. 3. The aim of the paper is to illustrate the broad range of applicability of this concept in several climate-change-related problems, and show that the underlying ensemble view enables one to explore a number of analogies with statistical physics. Particular emphasis is put on the natural measure on snapshot attractors with respect to which averages and other statistics can be evaluated providing a characterization of the climate at any instant of time. Section 4 is devoted to the illustration of the general ideas in a low-order, conceptual climate model where the time dependent snapshot attractor and its natural measure can be actually visualized, and in a high-dimensional intermediate-complexity climate model where only projections of the attractor can be illustrated, but spatial (geographical) features can also be studied. The remaining part of the paper treats additional problems and concepts. Section 5 is devoted to ergodicity (in the sense of coinciding ensemble and temporal averages) and its breakdown in changing climates, leading to the conclusion that temporal averages over individual time series do not provide an appropriate characterization in generic cases. As a particular example of spatial features, in Sect. 6, we turn to the problem of teleconnections, i.e. correlations between different properties at certain remote geographical locations. Via several examples we illustrate that teleconnections should be handled in the spirit of parallel climate realizations, e.g. by means of appropriately chosen correlation coefficients evaluated with respect to the instantaneous natural measure. A traditional approach based on empirical orthogonal functions is extended for the ensemble framework. The resulting quantities may essentially change in a changing climate. Section 7 shows that the ensemble approach provides an appropriate characterization of any experiments carried out in turbulence-like problems in which the external parameters undergo a temporal change. In problems where one of two coexisting traditional attractors disappears as a function of a changing parameter, the (temporally evolving) snapshot attractor may split into two qualitatively different branches of climate states (see Sect. 8). In this case, tipping is endowed with a probability. Section 9 is devoted to the problem of the dispersion of pollutants under climate change. In Sects. 10 and 11 we discuss open problems and give a short conclusion, respectively. Appendix A offers a didactic illustration of the concept of snapshot/pullback attractors by showing that in the class of dissipative linear differential equations this attractor is nothing but one of the particular solutions of the problem.

2 Changing Climates: Mathematical Tools

Our climate is changing, and it belongs to a class of systems in which parameters (e.g. the atmospheric \(\hbox {CO}_2\) concentration) are drifting monotonically in time. Even in elementary unpredictable models, an appropriate treatment of such a property is not obvious, since traditional methods like periodic orbit theories of chaos (e.g. [1]) cannot be used, as no strictly periodic orbits exist in such systems.

From the 1990s, nevertheless, there has been a progress in the understanding of nonautonomous dynamics subjected to forcings of general time dependence: the concept of snapshot attractors emerged. The first article written by Romeiras et al. [8] drew attention to an interesting difference: a single long “noisy” trajectory traces out a fuzzy shape, while an ensemble of motions starting from many different initial conditions, using the same noise realization along each track, creates a structured fractal pattern at any instant, which changes in time.Footnote 1 With the help of this concept, phenomena that were not interpretable in the traditional view could be explained as snapshot attractors, e.g. a fractal advection pattern in an irregular surface flow which also appeared on the cover of Science [11]. It is worth noting that the concept of snapshot attractors has been used to understand a variety of time-dependent physical systems (see e.g. [12,13,14,15,16,17]) including high-dimensional ones [18, 19], and laboratory experiments [20].

The spread of the concept of snapshot attractor in the field of dynamical systems was augmented by a development in the mathematics literature [21,22,23,24,25], and became accelerated by the fact that, in the context of climate dynamics, Ghil and his colleagues rediscovered and somewhat generalized this concept more than 10 years ago. They used the term pullback attractor [9, 26], and immediately referred to its important role in climate dynamics. It has become clear later [27,28,29] that in the context of climate change, not noisy excitations, rather a continuous drift of parameters (such as increasing \(\hbox {CO}_2\) concentration) is relevant, and therefore deterministic (noise-free) snapshot/pullback attractors capture the essence of the phenomenology of an unpredictable system under these changing conditions.

Qualitatively speaking, a snapshot or pullback attractor can be considered as a unique object of the phase space of a dissipative dynamical system with arbitrary forcing to which an ensemble of trajectories converges within a basin of attraction. A distinction from traditional attractors by means of the adjective snapshot/pullback is useful, since the former ones obey the property that they can be obtained from a single long time series. The equivalence of this “single trajectory” method and the instantaneous observation of an ensemble of trajectories emanating from many initial conditions (ergodic principle) does not hold with arbitrary forcing. One has to choose between the two approaches (see Sect. 5). It is the ensemble approach that is appropriate for a faithful statistical representation of a changing climate. The reason is that the ensemble also represents a natural measure which can be associated to any instant of time. Even if the concept of snapshot and pullback attractors is practically the same, it is useful to remark here that a pullback attractor is defined as an object that exists along the entire time axis (provided the dynamics remains well-defined back to the remote past), while a snapshot attractor is a slice of this at a given, finite instant of time (their union over all time instants thus constitutes the entire pullback attractor). Note, however, that if the dynamics is not defined back to the remote past, then the pullback attractor is also undefined, but from some time after initialization the snapshot attractors can be practically identified. This has to do with the fact that when ensembles are initiated at finite times, they quickly converge to a sequence of snapshot attractors, as demonstrated in Sec. 4.3. It might be illuminating to mention that in linear dissipative systems with time dependent forcings, well-known from elementary calculus classes, the particular solution of the linear system plays the role of the pullback attractor (nonchaotic in this case, as illustrated in Appendix A), which all trajectories converge to.

3 The Theory of Parallel Climate Realizations

In order to make the unusual concept of pullback/snapshot attractors plausible, which might appear too much mathematically-oriented, while the concept of observed time series is widely used, we proposed the term parallel climate realizationsFootnote 2 in [30]. Qualitatively speaking, we imagine many copies of the Earth system moving on different hydrodynamic paths, obeying the same physical laws and being subjected to the same time-dependent set of boundary conditions (forcing), e.g., in terms of irradiation. Parallel climate realizations constitute, in principle, an ensemble of an infinite number of members. At any given time instant, the snapshot taken over the ensemble, the snapshot attractor, represents the plethora of all permitted states in that instant, just like in a stationary climate. However, the ensemble, representing the natural measure of the snapshot attractor, undergoes a change in time due to the time-dependence of the forcing, and, as a consequence, both the “mean state” (average values) and the internal variability of the climate changes with time.

It is remarkable that the idea of parallel climate realizations came up as early as 1978 in a paper by Leith [31]. He also argued about the importance of parallel climate histories and claimed that the instantaneous state of the climate should be described in terms of the ensemble formed by these members, based on an analogy with classical statistical mechanics. He appears to have intuitively understood the central importance of time-dependent probability distributions under changing “external influences”. We feel that Leith should be strongly credited for these early illuminating ideas. However, he did not yet have the necessary methodological tools at hand to explore the full potential of this view and neither did it become widely adopted in the climate community.

The mathematical background discussed in the previous section was the one that has finally turned out to provide a strict and firm foundation for the practical applicability of the vision of parallel climate realizations. A basic element of this formulation is the existence of an instantaneous natural measure on the snapshot attractor which is the real source of the statistics that define climate.

It is worth recalling two essential properties known from chaos theory that remain valid in the snapshot/pullback approach, too:

-

The simultaneous presence of a wide variety of states is an inherent, objective feature of unpredictable systems, which cannot be reduced by external influences (e.g., more accurate measurement, or using a better numerical method). Internal variability is an inherent property of climate, too. This is expressed by the term “irreducible uncertainty” in the climate community [32].

-

These states may accumulate with a higher or lower density in certain regions of the phase space, thereby defining the natural measure on the time-dependent snapshot attractor (for an example, see Fig. 1). One of the important assertions of chaos is that, while following individual trajectories, predictability is lost quickly, chaos can be predicted with arbitrary precision at the level of distributions [1, 2].

The view of parallel climate realizations offers the opportunity of precisely answering classical questions raised by climate research. The “official” consensual assessment of the current climatic situation and its change can be found in the publications of the Intergovernmental Panel on Climate Change (IPCC, [6]). These texts refer to climate at various levels as “average weather”, implying a probabilistic view. Yet, they do not specify the probability distribution according to which the statistical quantifiers should be evaluated. The statistical description may rely on observations of the past, supported by the statement that “the classical period of averaging is 30 years.”Footnote 3 However, if the climate state itself is changing markedly within such a time interval, these averages unavoidably yield statistical artefacts that may be misinterpreted as they mix up events of the recent and more remote past.

The theory of parallel climate realizations claims that averages should be taken with respect to the natural measure\(\mu (t)\)on the snapshot attractor at time t. The average of an observable A over the ensemble of parallel climate realizations is the integral of A with respect to this measure:

The ensemble average in (1) belongs to a given time instant t, and this way temporal averaging can fully be avoided. Averages of observables with respect to the natural measure of the snapshot attractor provide information on the typical behavior, and the time evolution of such averages gives the temporal changes of this behavior.Footnote 4 From the variance or higher order moments about the average the statistics of fluctuations follows, which are different characteristics of the internal variability. We can say that the ensemble of parallel climate realizations is the generalization of the Gibbs distributions known from statistical physics for a non-equilibrium system whose parameters are drifting in time. The micro-states, at a given time instant t, are the permitted states of the climate system (the permitted positions in the phase space), while the macro-state is described by the full natural measure, \(\mu (t)\), including averages etc. taken with respect to this measure.Footnote 5

A general definition of climate change was given in this spirit as early as in 2012 by Bódai and Tél [27] who wrote: “climate change can be seen as the evolution of snapshot attractors”.

We illustrate the advantage of the existence of instantaneous probability measures on the snapshot attractor by the concept of climate sensitivity [34,35,36], which plays a central role in climate science [6] (for a recent review see [37]). In the most elementary formulation, one is interested in a quantity A characterizing climate at a fixed parameter value p and in how much this quantity changes when observed at another parameter value \(p+\varDelta p\). If all other parameters are also fixed, this is called “equilibrium climate sensitivity” [6], and can be obtained by evaluating an average of A over a long time interval. Time-dependence can be introduced in various ways, e.g. involving the parameter p itself (as usually done for \(\hbox {CO}_2\) concentration). We briefly discuss here the case when p is not changing in time, but the change of other parameters are responsible for a non-autonomous dynamics. In this case, we are in a position of defining instantaneous climate sensitivities over the ensemble as follows.

We determine first the ensemble average \(\langle A(t) \rangle _p\) of quantity A at time t with a fixed parameter p, and then the ensemble average with the shifted parameter \(\langle A(t) \rangle _{p+\varDelta p}\) at the same time. Denoting the difference by \(\varDelta \langle A(t) \rangle \), the sensitivity can be defined as

Given the full natural measure, of course, sensitivities beyond that of the average can also be defined, e.g. that of the standard deviation \(\sigma _A(t) =(\langle A^2(t) \rangle -\langle A(t) \rangle ^2)^{1/2}\) of quantity A as

This provides a measure of the change of internal variability under \(\varDelta p\), but any other cumulant can also be taken. One might also think of more advanced definitions, we only mention here the one based on an appropriate distance between the natural measures taken with p and \(p+\varDelta p\) at the same time t. An example of this distance can be the so-called Wasserstein distance [34], the evaluation of which is, however, rather computation-demanding in realistic climate models. As the formulas above suggest, the theory of parallel climate realizations opens the way towards a linear response theory of the climate [31, 35, 38] to be discussed in some detail in Sect. 4.3. Note that a response theory currently exists for infinitesimal changes only; finite-size changes as in (2)–(3) would require theoretical generalization.

After these conceptual considerations, let us briefly discuss the issue of numerical simulations, the application of the theory of parallel climate realizations to projections of the future of the climate, perhaps using cutting-edge Earth system models. Let us imagine that a simulation starts from an initial distribution of a large number N of points in the phase space. The range over which they are distributed is unlikely to lie exactly on the snapshot attractor belonging to the time instant \(t_0\) of initialization. Based on a general feature of dissipative systems (phase space contraction) and chaoticity, initial conditions are, however, forgotten after some time [1, 2]. As a consequence, the ensemble of any initial shape and distribution converges towards the pullback attractor (as schematically illustrated by Fig. 9 of the Appendix, and by Fig. 3). The convergence is found to be typically exponentially fast [29, 39].Footnote 6

After a characteristic convergence time, \(t_{\mathrm {c}}\), the numerical ensemble thus faithfully represents the natural measure of the snapshot/pullback attractor. Statistics of the climate can properly be determined from such numerics, but only for times \(t > t_0+t_{\mathrm {c}}\). The value of the convergence time will be given in the forthcoming examples (and shall be found surprisingly short on the time scales of interest).Footnote 7 In simulations carried out with a sufficiently large number N of ensemble members, the formula (1) of the average is replaced by a much simpler one. Denoting the numerically obtained value of an observable A at time t in the ith member as \(A_i(t)\), the average is an arithmetic mean:

Higher-order moments and multivariate quantifiers (e.g. correlation coefficients) can be determined analogously.

Although such a numerics formally provides N different time series, chaos theory teaches us that their individual meaning is lost after the predictability time (which might be on the order of \(t_{\mathrm {c}}\)). Individual time series are thus on the long run unavoidably ill-defined, and non-representative. It is only their union, the ensemble tracing out the snapshot/pullback attractor and the corresponding natural measure \(\mu (t)\) upon it, that carries reliable content.

4 Illustration of Parallel Climate Realizations

4.1 Illustration in a Conceptual Model

Since an explicit illustration of the snapshot attractors’ properties can be acquired in low-order models only, we turn to such an example. Lorenz formulated an elementary model of the mid-latitude atmosphere in just three first order differential equations [41]. He assigned a meteorological meaning to each variable: x represents the instantaneous average wind speed of the westerlies over one of the hemispheres, and y and z are the amplitudes of two modes of heat transfer towards the polar region via cyclonic activity. The forcing of the system stems, of course, from the insolation, specified by a quantity called F. This is proportional to the temperature contrast between the equator and the pole (and can also be related to the average carbon dioxide content of the atmosphere). The model has been studied in many papers (see e.g. [42,43,44]). The time unit corresponds to 5 days, and therefore it was natural for Lorenz to introduce seasonal oscillations by making F sinusoidally time-dependent with a period of \(T = 73 \, \text {time units} = 365 \, \mathrm {days}\) about a central value of \(F_0\) [45].

In today’s climate change, global warming is primarily due to the warming of the poles [46, 47], which means that the temperature contrast decreases in time. This can be taken into account in the Lorenz84 model by making the central \(F_0\) time-dependent [27]: we can write \(F_0(t)\) instead of \(F_0\). The time axis is chosen such that climate change sets in at \(t = 0\), and afterwards, for the sake of simplicity, \(F_0\) decreases linearly for 150 years, as shown in the last panel of Fig. 1.

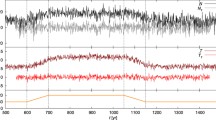

Parallel climate realizations of this model are produced by taking \(N = 10^6\) randomly distributed initial points in a large cuboid of the phase space \((x, \ y, \ z)\) at \(t_0 = -\,250\,\mathrm {years}\), and by following the numerical trajectories emanating from them. The convergence to the attractor is found to occur in 5 years with at least an accuracy of one thousandth, so the convergence time is \(t_{\mathrm {c}} = 5\,\mathrm {years}\). We follow the N trajectories until time t when the simulation is stopped. By plotting the points of the ensemble at time \(t > t_0 + t_{\mathrm {c}}\) on a fine grid we obtain the snapshot attractor at t along with its natural measure. This is illustrated in the first panels of Fig. 1, where, for the sake of clarity, only (x, y) values to which \(z=0\) belongs (with \({\dot{z}} > 0\)) are plotted. The natural measure obtained shows how often the states represented by each pixel are occurring in the ensemble of parallel climate realizations. Figure 1 illustrates that this distribution might be rather irregular above its support, the snapshot attractor. Comparing different time instants t reveals that both the attractor and its natural measure change, and undergo quite substantial changes on the time scale of decades.

The numerically determined natural measure of the ensemble of parallel climate realization in the Lorenz84 model above the \((x,y,z=0)\), \({\dot{z}}>0\) plane in the 25th, 50th, and 88th years after the onset of climate change (December 22 of each year) in panels a–c, respectively. Results are given in bins whose linear size is chosen to be 0.01 in both directions. The support of the natural measure is the snapshot attractor belonging to these time instants. The last panel shows a single time series of x in one member of the ensemble (thin magenta line) and the ensemble average \(\langle x(t)\rangle \) (thick black line). The forcing scenario, i.e., the time dependence of the annual mean temperature contrast \(F_0\) is pictorially represented in the lower part of panel d (Color figure online)

Note that the size of the snapshot attractor is bounded, very high x or y values cannot occur. In other words, there are regions of the (x, y) plane at any time instant that correspond to forbidden states.

The fact that the convergence time \(t_{\mathrm {c}}\) is 5 years implies that the distributions of Fig. 1 are objective (do not depend on the details of their generation, since \(t > t_0 + t_{\mathrm {c}}\)). Averages taken with respect to the snapshot natural measure are thus also objective. Panel d) of Fig. 1 exhibits the ensemble average \(\langle x(t)\rangle \) of variable x (the strength of the westerlies) as a thick black line. This can be interpreted as the typical wind speed of westerlies over the parallel climate realizations. One sees indeed that this is constant up to the onset of the climate change at \(t=0\), after which a slightly increasing trend occurs with strong irregular vacillations superimposed on it.Footnote 8

The magenta curve is a single time series of x from one of the ensemble members. The vacillations here are so strong that hardly any trend can be extracted, but, as discussed earlier, such time series are ill-defined due to the unpredictability of the dynamics.

4.2 Ensemble View in Other Conceptual Models

Natural measures of snapshot attractors (also called sample measures [26]) were generated and investigated in several papers. Knowing them enables one to determine different statistical measures, or the aforementioned climate sensitivity [35], as well as, extreme value statistics [28].

Early publications focused on noisy systems, where the ensemble was based on a fixed realization of the random process. Chekroun et al. [26] determined the sample measure of intricate distribution and time dependence in the noisy Lorenz attractor, and in a stochastic model of El Niño–Southern Oscillation (ENSO), Bódai et al. [48, 49] studied the measure on the snapshot attractors arising in the context of noise-induced chaos. The Lorenz84 model subjected to chaotic signals, white and colored noise was investigated by Bódai et al. [28].

Special attention was devoted to periodically forced low-order climate models without external noise. An investigation of the Lorenz84 model with seasonal forcing [45] was carried out by Bódai et al. [27] from the point of view of an ensemble approach and led to the conclusion that the snapshot attractor of the forced system appears to be chaotic in spite of the fact that in extended regions of the forcing parameter F of the time-independent system the attractors are periodic. It is the underlying transient chaos what the periodic forcing converts into permanent chaos. Daron and Stainforth [50] investigated the Lorenz84 model coupled to an ocean box model under seasonal forcing. They compared the distributions based on the instantaneous snapshot attractors and an ensemble of finite-time statistics (30-year averages), and analysed the ensemble-size-dependence of the finite-size estimates. Both taking temporal statistics and a finite ensemble size have been pointed out there to yield biases in general; and the authors suggested that “ensembles of several hundred members may be required to characterize a model’s climate”. Daron and Stainforth [51] provided further evidence of the biases and discrepancies between temporal and ensemble-wise statistics, and demonstrated the initial-condition-dependence of “transient” convergence properties of ensembles. Pierini [52] and Pierini et al. [53] considered a periodically forced reduced double-gyre model described by four variables and determined sample measures, while Chekroun et al. [54] studied the pullback attractor and its measure in a delay differential model of ENSO.

The effect of a kind of temporally quasi-periodic forcing was investigated by Daron and Stainforth [51] who considered the classical Lorenz model as a model climate in which one of the parameters was made time-dependent.

It should be noted that periodically forced systems constitute a rather special case of the pullback/snapshot approach since in this class the use of this approach can be avoided. When such cases are considered on a stroboscopic map taken with the period of the driving, the dynamics turns out to be autonomous. Attractors in such systems are traditionally called [1] attractors without the adjective pullback/snapshot. These attactors are in fact ergodic as the classical paper by Eckmann and Ruelle [7] claims.

It appears to be worth reserving the term pullback/snapshot attractor for nonperiodically time-dependent systems since these are not ergodic as we shall see in Sect. 5. In this sense, perhaps the first sample measures determined for a deterministic pullback/snapshot attractors is the one found in [28] for the Lorenz84 model forced with the x component of the dynamics on the celebrated Lorenz attractor. Cases forced with other irregularly recurrent signals are treated in [40, 55] and in the last section of [53]. An interesting discovery of the previous paper is the identification of two disjoint basin boundaries, designating the coexistence of two pullback attractors (a chaotic and a nonchaotic one). The effect of a forcing related to a monotonic deterministic parameter drift was first investigated by Drótos et al. [29] in the context of the Lorenz84 model, results of which are sampled in Fig. 1, as discussed in the previous subsection.

4.3 Illustration in a General Circulation Model

The question naturally arises of how the aforementioned properties of snapshot/pullback attractors manifest themselves in more realistic climate models. The Planet Simulator (PlaSim) is an intermediate-complexity climate model developed at the University of Hamburg. It is a well-documented [56, 57] open source software, freely accessible.Footnote 9 Due to discretization, the degrees of freedom are on the order of \(10^5\). The temporal development of the output fields, such as temperature, wind in different air layers, or surface pressure and precipitation can be displayed on sequences of geographic maps. Therefore, a single climate realization results in such “movies” of different fields, and parallel climate realizations in several movies. Figure 2 illustrates that the maps corresponding to the same time instant, but taken from two different realizations differ considerably. One can see that, in contrast to the previous conceptual model, it is now possible to investigate also regional or local quantities and spatial correlations between them in the spirit of spatio-temporal chaos research [58].

The number of parallel climate realizations one can choose is, of course, limited by the running time, and in our example an ensemble of \(N=40\) members is used obtained by minor random perturbations of the initial surface pressure field. In this large model, we can only examine the projection of the natural distribution on some of the selected quantities.

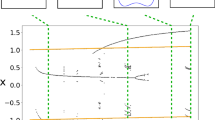

The concentration of carbon dioxide can be prescribed, and the lower part of Figure 3 shows the scenario used for this example. Climate change begins in the 600th year, the concentration doubles in a period of 100 years, and after a plateau the concentration ultimately restores to its initial value in year 1150.

In [59] the local surface temperature T at the geographic location of the Carpathian Basin is determined in all 40 ensemble members until the end of year 1500. These are represented by the thin light gray lines in Fig. 3 to be compared with the ensemble average \(\langle T(t)\rangle \) given in the thick black line. The difference is strong again, individual time series are not representative.

The time evolution of the annual average surface temperature in the Carpathian Basin following the \(\hbox {CO}_2\) scenario (lower panel) is shown by thin gray lines in each member of the Planet Simulator ensemble, and their ensemble average \(\langle T(t)\rangle \) is marked by a thick black line. The initial condition of year \(t = 0\) resulting in a temperature of about \(5\,^{\circ }{\mathrm {C}}\) is forgotten by \(t_{\mathrm {c}} = 150\) years: after this a constant value (approximately 2 \({^{\circ }\mathrm {C}}\)) characterizes the stationary climate up to the onset of climate change in year 600. The ensemble averages in additional simulations launched in years 570 and 1020 (thick red and yellow lines) are also shown. The snapshot attractor is indicated by a dashed black line for t < 150 years. Before this time, the ensemble of simulations does not coincide yet with the snapshot attractor (Color figure online)

It is satisfying to see that within the first 600 years we find a constant average temperature since the climate is stationary. This is seen in the ensemble average only after approximately \(t_{\mathrm {c}}=150\,\mathrm {years}\). The decay leading to this constant is due to the fact that the initial condition was an artificial choice, which fell outside the attractor. Although our simulation started at \(t=0\), the existence of a constant climate can be assumed back in the remote past. In order to emphasize that a pullback attractor exists already at \(t=0\), we extended the black plateau with a dashed black line down to year 0. This helps visualize that the numerical simulation is attracted by an objective entity, the pullback attractor. In our figure this corresponds to the union of the dashed line and the continuous black line for \(t > t_{\mathrm {c}}\). For \(t > t_{\mathrm {c}}\) (\(=150\,\mathrm {years}\)) the average over the numerical ensemble faithfully represents the typical behavior of the surface temperature on the pullback attractor. Its shape is reminiscent of the graph of the \(\hbox {CO}_2\) concentration, occasionally with some delay. As a consequence, slices of the pullback attractor, i.e., the snapshot attractors are time-dependent in intervals when there is a strong \(\hbox {CO}_2\) change. We note again that what we see is the projection of the attractors of the high-dimensional phase space on a single variable, on the temperature of the Carpathian Basin.

As an additional proof of the objective existence of the pullback attractor, we launch additional ensembles of \(N=40\) members at \(t_0'=570\,\mathrm {years}\) and \(t_0''=1020\,\mathrm {years}\) (with the same type of initial condition as at \(t = 0\)). The ensemble averages are represented by the thick red and yellow lines, and it is apparent that they reach the average of the first ensemble simulation (the thick black line) relatively quickly. In addition, the time needed for this is approximately 150 years in both cases, that is, the convergence time \(t_{\mathrm {c}}\) is found to be practically independent of the time of initialization (\(t_0\), \(t_0'\) or \(t_0''\)). We see that whatever time \(t_\mathrm {in}\) an ensemble is initiated, for times \(t > t_\mathrm {in} + t_{\mathrm {c}}\) the ensemble correctly represents the pullback/snapshot attractor, and thus displays the internal variability of the climate.

Concerning a climate projection for year t, Fig. 3 suggests that from the ensemble of realizations a probability distribution of a given quantity, in our example the surface temperature T, can be generated. The probability P(T, t) possesses its mean as the ensemble average \(\langle T(t)\rangle \), has some width, but as our previous discussion on the non-permitted states implies, the support of P is bounded. This means that we can be sure that certain temperatures are impossible (within the particular model). For e.g. \(t=650\,\mathrm {years}\) the permitted temperatures are in the interval (2, 6) \({^{\circ }\mathrm {C}}\) (with \(\langle T\rangle =3.8\)\({^{\circ }\mathrm {C}}\)), we can thus predict that the chance to have a temperature \(T>6\) or \(T<2\)\({^{\circ }\mathrm {C}}\) is practically zero in this year.

The convergence to a “climatological distribution” (i.e. the natural measure on the snapshot attractor in our language) was foreseen by Branstator and Teng in 2010 [60], although neither a clear numerical demonstration, similar to that in Fig. 3, was given, nor a proper justification, referring to the theory of nonautonomous dynamical systems [9], was provided. Beyond reviving Leith’s basic idea about the existence of a time-dependent “climatological distribution” [31], the authors claimed that (i) it is traced out by a remotely-initialized ensemble, that (ii) a probability distribution emanating from nearby initial conditions differs from the “climatological distribution” during its early evolution, but (iii) this difference vanishes after a finite amount of time due to memory loss.Footnote 10

Beyond case-by-case direct numerical simulation for each different forcing scenario of interest, we mention here the application of Ruelle’s response theory [65], whereby one wishes to determine Green’s functions, i.e. the response functions of the system [31, 38], and then predict the response of the natural measure to any forcing scenario. An illustration of this idea in the aforementioned intermediate-complexity model PlaSim, was given by Ragone et al. [35], and an application of the technique to an Earth system model is presented in [66].

4.4 The Boom of “Large Ensembles” in State-of-the-Art Earth System Models

In the recent couple of years a naive interest in so-called “single-model initial-condition large ensembles” for Earth system models (ESMs) has abruptly risen. “Large” means a number of members on the order of 10 or 100 at most, and the attribute “initial-condition” indicates that the members differ in their initial conditions. At this time large ensemble data sets exist for about a dozen of coupled general circulation models or ESMs, and analyses of a few of such data sets have been already reported on.

Most of these ensembles are generated by branching a single run, introducing small or moderate perturbations to some or all model components, to obtain initial conditions for the different ensemble members. With this scheme, called nowadays the “micro” initialisation method [67], it takes some time that the ensemble converges to the natural measure (or, in the language of climate research, full ensemble spread is reached), and the output data have to be excluded from any climatological analysis before convergence becomes complete from some practical point of view. The treatment of various time scales present in the Earth system, including rather long ones, poses a theoretical problem with practical implications, see Sect. 10.2. We believe that a simple and useful approach is to concentrate on variables in the atmosphere and the upper ocean only (above the thermocline), including sea ice complemented with land, and investigate convergence properties in these variables, which have moderate time scales (up to a few decades at most). It may be important to include upper oceanic variables to the assessment of convergence, since the faster time scales of the atmosphere, the sea surface or the land may result in an apparent faster convergence which, however, does not involve layers of the upper ocean.Footnote 11 Earlier convergence might be facilitated by perturbing some oceanic variables besides or instead of atmospheric ones [33, 60], but we emphasize that any initialization scheme suffices in principle (unlike what is suggested by [33]).

Most large ensemble projects that are available to date are reviewed in [69]. With a great vision and effort, a set of these has been made available for public use by the “US CLIVAR Working Group on Large Ensembles” in a centralized data archive,Footnote 12 the content and utility of which is discussed by Deser et al. [67]. In all ensembles that we are aware of, the data belonging to the earliest time does not conform to the natural measure of the attractor but only at later times. The time interval to discard at the beginning of the simulations would ideally need case-by-case specific investigations, but it can generally be estimated to be a few decades. In a few works, concentrating on the atmosphere, the rapidity of atmospheric memory loss is emphasized [70, 71], even though this approach may be insufficient due to the dynamics of the upper ocean as discussed above. Works that pay attention to convergence in the upper ocean or the sea ice (either via memory loss, i.e., independence of initial conditions, or via concentrating on the increase in the ensemble spread) conclude about time scales of around a decade, which they discard before the beginning of their analyses [68, 72,73,74,75]. Unfortunately, several of the more recent works do not pay attention to the need of convergence (e.g. [76,77,78]), which may corrupt analyses that include the time interval of the convergence process.

After convergence takes place, any ensemble represents the natural measure of the model faithfully and can thus be regarded as a set of parallel climate realizations. The only complication may arise from the previously mentioned extremely long time scales in the deep ocean, for which see Sect. 10.2, and which may affect sea level [79] among the more commonly analyzed observables. Deep oceanic variability is sampled only in a single set of ensemble simulations, the Max Planck Institute Grand Ensemble (MPI-GE, [69]), all other ensembles are practically initialized in a single deep oceanic state.

The so-called “macro” initialization scheme in the MPI-GE is qualitatively different from that of the other ensembles. In this case, no perturbation is applied. Instead, an almost 4000-year-long control run, subject to a fixed forcing representing “preindustrial conditions” of 1850, is sampled in its last 2000 years, with the intention of fully sampling variability already at the beginning of the ensemble simulations [69]. The sampling is, however, too frequent for the statistical independence of the ensemble members: the initialization of certain members is separated by no more than 6 years, which is definitely less than the memory of even the upper ocean. Furthermore, constant 1850-conditions correspond to a different attractor than the one that evolves according to the preceding historical forcing including considerable volcanic activity [80]. For these reasons, discarding the first decades is desirable in the MPI-GE as well.

Additional issues about initialization may arise from the insufficient spinup time: drifts corresponding to the convergence process from some state off the attractor in deep oceanic variables may appear and are actually documented in the MPI-GE [69], while their importance is mostly unknown in the other ensembles. Numerical drift, like in CESM-LE [76], may also represent a technical problem.

Even with all the drawbacks and deficiencies, “single-model initial-condition large ensembles” of ESMs are expected to be essential for the determination of the forced response of certain observables, especially those to do with localized climate phenomena, when the relatively strong internal variability masks the possibly weak forced response signal [81,82,83,84,85,86,87,88].

One may also try to utilize the framework of single-model initial condition ensembles, beyond directly describing the complete internal variability of fully coupled global ESM simulations, for characterizing some kind of natural variability specifically associated to some given subsystem of Earth. However, if different subsystems are coupled, quantifying internal variability restricted to only one subsystem is not necessarily a well-defined problem. An example is provided by ensemble studies of the ocean subsystem published recently (see [89] and references therein) in the framework of the OCCIPUT project (OceaniC Chaos - ImPacts, strUcture, predicTability [90, 91]). Their 50-member ocean ensemble is forced by a single realization of the atmospheric dynamics (corresponding to historical instrumental records) and is claimed to represent the “intrinsically oceanic” contribution to the full variability (which includes that of the atmosphere). However, the atmospheric variability is influenced by the ocean in any realistic ESM configuration through a two-way coupling of the modules, and the full variability is described by an ensemble in which both atmospheric and oceanic variables have a nonzero ensemble spread. If one takes the time evolution of the atmospheric variables in different members of such a hypothetical full ensemble, and then constructs an ocean ensemble with each of these atmospheric realizations applied as a fixed forcing, the result will be an extended set of OCCIPUT-type ocean ensembles. Due to the very nature of two-way coupled nonlinear systems, the superposition of these ocean ensembles with prescribed atmospheric forcing are unlikely to be statistically identical to the full ensemble of the two-way coupled configuration. Oceanic processes in the latter will then not be described correctly by the oceanic processes in the individual OCCIPUT-type ocean ensembles in a statistical sense. Note that the inconsistency of the OCCIPUT-type approach (to quantify something called “intrinsic oceanic variability”) with the actual oceanic processes will appear within a single configuration (which can be any arbitrary realistic configuration that involves atmospheric variability). Although OCCIPUT-type ensembles are thus inconsistent with realistic assumptions about the ocean-atmosphere coupling and also with the spirit of parallel climate realizations, they might shed qualitative light to physical mechanisms relevant for inter-decadal oceanic variability, a concept discussed in [92]. For a quantitative description of internal variability if subsystems have substantially different time scales, when two-way coupling can be approximated by one-way coupling, see basic ideas in Sect. 10.2.

In a different approach, the manifestation of the full internal variability of the Earth system on spatial scales unresolved by global ESM simulations may be studied by downscaling such ensemble simulations by regional climate models, see [93] and references therein.

5 Nonergodicity and Its Quantification

The original observation of Romeiras et al. [8], according to which a single long trajectory traces out a pattern different from that of an ensemble stopped at a given instant, implies that ergodicity (in the sense of the coincidence of ensemble and temporal averages) is not met in nonautonomous systems. Traditional chaotic attractors are known to be ergodic [7]: sufficiently long temporal averages coincide with averages taken with respect to sufficiently large ensembles.

Daron and Stainforth [50, 51] have demonstrated discrepancies between temporal and ensemble-based averages, saying that what they call the “kairodic assumption” is not satisfied in nonautonomous systems.

A systematic investigation of such questions was carried out by Drótos et al. [94]. In order to characterize the difference between the two kinds of averages, the term nonergodic mismatch was coined. Consider an observable A. Its time average over a time window of length \(\tau \) (centered at time t) in a single realization is denoted as \(\overline{A_\tau (t)}\). This is compared with the average \(\langle A(t)\rangle \) of the same quantity taken over an asymptotically large ensemble at t. The nonergodic mismatch \(\delta _\tau (t)\) is defined as

and is a quantity that depends not only on the time instant t but also on the window length \(\tau \) over which the temporal average is taken. Ergodicity would be marked by the vanishing of this mismatch for \(\tau \rightarrow \infty \).

The nonergodic mismatch can be evaluated along each single realization of the climate ensemble and depends on the realization. For finite window length \(\tau \), different values of \(\delta _\tau (t)\) are expected, even in ergodic cases, since the window length \(\tau \) is not necessarily sufficient to visit the full phase space. We thus expect a distribution of \(\delta _\tau (t)\) in the ensemble of parallel climate realizations for any t and \(\tau \). From the numerical results a probability density function \(P(\delta _\tau (t))\) can be obtained, called the nonergodic mismatch probability density function (pdf). Here we summarize the results obtained in [94] qualitatively.

In an ergodic case, a stationary climate (panel a of Fig. 4), the distribution remains centered about zero, that is at least the ensemble average of this mismatch is zero. The width of the distribution, the spread, depends on the window length \(\tau \) and decreases with increasing \(\tau \). The decrease is, however, rather slow, decays according to the law of large numbers as \(1/\sqrt{\tau }\). To have a feeling, we mention that in the stationary case of the Lorenz84 model a one-hundredth agreement between the time average and the ensemble average of variable y requires a window length of about \(\tau =10{,}000\,\mathrm {years}\), while a 0.1 degree agreement in the surface temperature data of Fig. 3 of PlaSim can be expected with about \(\tau =200\,\mathrm {years}\).

In the case of a monotonic climate change (panel b of Fig. 4) the pdfs are not centered about zero, moreover, their maximum moves away from zero with increasing \(\tau \). The spread decreases with \(\tau \), and scales as \(1/\sqrt{\tau }\) again.Footnote 13 The fact that the ensemble average of \(\delta _\tau (t)\) increases means that the deviation from ergodicity is the stronger on average the larger the window length \(\tau \) is. It is therefore needless to hope for the validity of ergodicity in a changing climate. This provides an additional argument for avoiding the use of individual time series in climate simulations. Certainly, the quantity \(\langle |\delta _\tau (t)|\rangle \), called the ergodic deficit in [94], can be considered as a measure for the breakdown of ergodicity.

6 Teleconnections: Analyzing Spatial Correlations

Teleconnections are statistical correlations between the states of remote locations of the globe. These amazing links connect regions lying thousands of kilometers from each other and are manifestations of the structured nature of internal variability. One of the most important teleconnections is the relationship of the amount of the precipitation or the average temperature of the Indian summer monsoon with the temperature of the Central Pacific. If the Central Pacific gets warmer, then Southern India becomes wetter and colder.

All traditional methods quantifying teleconnections (evaluating correlations in some way) agree in that they are based on long-term temporal statistics. This naturally implies that these methods, in principle, cannot be used in a changing climate, since they bring certain subjectivity into the investigation (see Sect. 2). Furthermore, investigating the teleconnections through the temporal correlations between a so-called teleconnection index and another variable (e.g., temperature or precipitation) a single correlation coefficient can be obtained. This correlation coefficient characterizes the strength of the relationship for a given time interval, assuming that the climate is stationary for this given period. However, as climate is changing, there is no guarantee that the strength of the teleconnections remain the same.

The application of the parallel climate realizations provides a simple solution for the aforementioned problem, eliminating also any subjectivity. With a sufficiently large number (N) of realizations an ensemble-based instantaneous correlation coefficient can be defined which provides the appropriate characterization of the strength of teleconnections in the spirit of parallel climate realizations.

At a given time instant t, utilizing the whole ensemble, N values of two variables A and B (between which the relationship is studied) are available, and the ensemble-based correlation coefficient is obtained as

where \(\sigma _A(t)\) and \(\sigma _B(t)\) are the ensemble standard deviations of quantity A and B, as defined in relation with Eq. (3). The obtained correlation coefficient characterizes the chosen time instant t indeed. Therefore, during a changing climate the time-dependence of the strength of correlations, i.e., \(r_{A,B}(t)\), can be studied. In the context of teleconnections, one of the variables A and B is chosen typically as a teleconnection index, while the other is some common meteorological variable.

As a first example, we consider the North Atlantic Oscillation (NAO) [95,96,97], which is an oscillation observable in the difference of sea level pressure between the permanent low pressure system over Iceland and the permanent high pressure system over the Azores. Teleconnection indices typically characterize the phase of an oscillation in a meteorological quantity taken at remote locations. In particular, the NAO teleconnection index (NAOI) is based on the difference in the normalized sea level pressure between Iceland and the Azores. Since the pressure difference controls the direction and strength of the westerlies entering into the European region, a high (positive) NAOI, meaning large difference in the sea level pressure between Iceland and the Azores, implies stronger westerlies. These result in higher winter temperature in the Scandinavian region and in the eastern United States, and a lower temperature in the Mediterranean and Greenland [98,99,100]. Analogously, low (negative) NAOI goes together with above-than-average precipitation during winter in Scandinavia, and below-than-average precipitation over the Mediterranean.

At a given time instant, it is also possible to compute an instantaneous teleconnection index in the spirit of parallel climate realizations. For the NAO index, NAOI, the definition in the ensemble framework for the ith member reads as

where \(p_{\mathrm {A},i}(t)\) and \(p_{\mathrm {I},i}(t)\) are the sea level pressures in the ith ensemble member at the Azores and Iceland, respectively.

Figure 5 illustrates the time evolution of the correlation coefficient between the NAOI and the Mediterranean winter temperature and precipitation in PlaSim under the \(\hbox {CO}_2\) concentration change scenario given in Fig. 3, computed for a 40-member ensemble of climate realizations. Similarly to what is experienced nowadays, during the plateau of 360 ppm a negative correlation coefficient between the NAOI and both quantities can be found: larger NAOI (due to larger pressure difference between the Azores and Iceland) results in lower temperature and less precipitation in the Mediterranean region. However, as the \(\hbox {CO}_2\) concentration increases, the correlation of the NAOI and the temperature decreases and practically disappears on the plateau of 720 ppm \(\hbox {CO}_2\). We also emphasize that generally different quantities are affected in different ways: the right panel of Fig. 5 demonstrates that, e.g., the strength of the teleconnection with the Mediterranean precipitation does not change at all despite the change in the forcing [30]. We note that the large fluctuations in the curves are due to the relatively low number of climate realizations. The utilization of a larger ensemble would result in smoother curves, as the amplitude of these fluctuations, representing the sampling error of the correlation coefficient for a finite number of ensemble members, monotonically decreases with increasing ensemble size. These examples draw attention to the fact that remarkable changes in the typical strength of the teleconnections can be observed.

The time evolution of the ensemble-based correlation coefficient of the NAOI with the Mediterranean winter (December to February) surface mean temperature T (left) and precipitation P (right) in the PlaSim model. The \(\hbox {CO}_2\) scenario can be seen in Fig. 3

We turn now to a brief discussion of one of the most known teleconnections, the El Niño–Southern Oscillation phenomenon (ENSO). ENSO is manifested in the irregular variation of wind and sea surface temperatures over the tropical Pacific Ocean [92, 101,102,103,104]. ENSO has an impact locally and globally as well. It controls at least the half of the global climate system, therefore it is urgent to study its teleconnections.

One prominent ENSO teleconnection is the relationship with the Indian summer monsoon. In the framework of parallel climate realizations, ENSO indices similar to Eq. (7) can be defined. An increasing strength of the teleconnection between a particular ENSO index and the Indian summer precipitation has been detected in the MPI Grand Ensemble in the 20th century. No strong change was found, however, under future scenarios forced even more strongly either in the MPI Grand Ensemble or in the CESM Large Ensemble [87]. Very recently [88] a similar conclusion has been drawn regarding the observed strengthening of the Pacific Walker circulation, namely, that the forced response signal seen in the CESM-LE is very weak, and the apparent strengthening should be mostly due to internal variability.

Another phenomenon is the Arctic Oscillation (AO) which appears as a ring-like pattern of sea-level pressure anomalies over the Northern Hemisphere centered at the polar region [105, 106]. Since we experience the largest temperature changes in the Arctic region, where the surface temperature increase is twice that of the tropics [46, 47], it is also worth investigating correlations in the context of AO-related phenomena.

The AO phenomenon has the specific feature that its index (AOI) is defined solely by means of EOF (empirical orthogonal function) analysis (also known as principal component analysis in the physics literature) [107]. Traditionally it is also based on time series utilizing long-term statistics. In this context, it is worth emphasizing here that the theory of parallel climate realizations provides an opportunity for an ensemble-type extension of EOF analysis, too. In this spirit a novel, so-called snapshot EOF (SEOF) analysis method has been developed in [108]). It computes the characteristic pattern for the AO, represented by the leading mode of the EOF analysis over the ensemble for any given time instant. Hence it is capable of monitoring both the time dependence of the pattern and that of the AO amplitude. We note that a similar method (called EOF-E) has also been developed recently in Ref. [109], however, it differs from [108] as it washes together the variability of the monthly data and that of the ensemble.

The AOI teleconnection index is computed in this framework as the standardized principal component of the first EOF mode of the SEOF analysis. Particular features of the AO and its related phenomenon have been studied by means of two state-of-the-art climate models (CESM-LE and MPI-GE) in [108]. The results reveal that the AO pattern and the AO-related phenomena, usually treated to be stationary, are actually time-dependent. Furthermore, the AO’s amplitude, i.e., the fluctuation in the sea level pressure field, appears to be enhanced significantly. The AO-related phenomena also show a remarkable shift, especially in wintertime at the Eastern coast of the USA and Northern Europe between 1950 and 2100 in the investigated scenarios.

Finally, we mention that the SEOF method can also be used as a refined characterization of ENSO. Preliminary results [110] suggest that the influence of the ENSO on the Indian Monsoon increases in the 21st century: the anticorrelation becomes stronger, resulting in wetter summers in India in El Niño years. The Arctic and the tropics exhibit considerable connections with each other, therefore, studying ENSO and AO as a coupled system could be an interesting direction of future research.

7 Ensembles in Experiments

“Minimal modeling” of various components of the terrestrial climate system using laboratory-based experiments has a long history stretching back to the mid-20th century [111, 112]. Ever since, measurements investigating rotating, density-driven shallow-layer flows have provided remarkably useful insights to the basic underlying phenomena of atmospheric and ocean circulation, such as cyclogenesis via baroclinic instability [113], time-reversal asymmetry of temperature fluctuations in weather station records [114], excitation of internal gravity waves [115] just to name a few examples. Furthermore, laboratory models of the mid-latitude atmospheric circulation have been applied to test and validate numerical hydrodynamic solvers [116] and meteorological (ensemble) forecast techniques [117].

Such experimental investigations nicely complement research based on numerical general circulation models: the latter can, theoretically, access the full set of parameters but with a limited resolution which hides important subgrid-scale nonlinear phenomena that may affect multiple scales. Experiments, however, provide practically infinite spatio-temporal resolution (making the setting an ‘analogue computer’ in a sense) but are very limited in the number of parameters to be controlled.

It is indeed impossible to scale down the plethora of relevant effects of the actual climate system simultaneously to a typically tabletop-size laboratory tank. Yet, a careful order-of-magnitude analysis of the equations of motion enables experimenters to select the most relevant non-dimensional parameters the setting of which yields an approximate hydrodynamical similarity to the large-scale flows in question. For ‘sideways convective’ rotating flows – such as thermally driven ocean currents or atmospheric circulation cells powered by the Equator-to-pole gradient of solar heat flux – the most important similarity parameter is the thermal Rossby number \(\mathrm {Ro}_T\) which reads as

where g is the gravitational acceleration, \(\alpha \) represents the volumetric thermal expansion coefficient of the medium, \(\varOmega \) is the rotation rate (of the planet or experimental tank), \(\varDelta T\) denotes the lateral temperature contrast, and H and L are the vertical and horizontal scales, respectively.

\(\mathrm {Ro}_T\) is a measure of (geostrophic) turbulence in the system. When \(\mathrm {Ro}_T \ll 1\) holds, the flow is considered quasi-geostrophic, i.e. dominated by the Coriolis force; the planet-scale currents in the atmosphere and the ocean are in this regime, with \(\mathrm {Ro}_T\) values of ca. 0.1 and 0.01, respectively [118]. Typically, the smaller \(\mathrm {Ro}_T\) is, the more (geostrophically) turbulent and more irregular the flow patterns become. Thus, by setting different values of rotation rate \(\varOmega \) and lateral temperature contrast \(\varDelta T\) in a given laboratory tank, qualitatively different flow structures can be encountered.

This observation opens up the opportunity of modeling climate change scenarios experimentally via applying time-dependent thermal boundary conditions \(\varDelta T(t)\) as forcing that are expected to have a similar effect to the \(\hbox {CO}_2\) forcing used in the numerical models. As explained earlier, in the real Earth system the Equator-to-pole temperature difference tends to decrease in parallel to the rise of global mean temperature [46, 47].

An obvious advantage of experimental setups compared to observations of the real Earth system is the opportunity to reproduce experiments. Reproducing them with the same boundary conditions (forcing) most naturally provides an ensemble of different realizations of the same process, which represents the multitude of possibilities permitted by turbulence or chaotic-like phenomena, i.e., parallel realizations of the minimal climate system model.

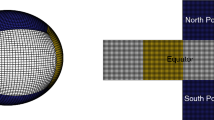

Results from a small ensemble, consisting of nine individual experimental members, of a climate-change-inspired setup described above were reported in [20]. Temperature time series were obtained from a rotating differentially heated annular wave tank mounted on a turntable and rotating about its axis of symmetry (Fig. 6a), yielding \(\mathrm {Ro}_T \sim 0.01\). From each ensemble member 10000s (3300 revolutions of the tank) of temperature data were evaluated, with the onset of ‘climate change’ (hereafter marked as time zero, \(t = 0\)) occurring exactly at half time. In this scenario the lateral temperature contrast \(\varDelta T\) was set to be constant at \(t<0\) followed by an exponential decay \(\varDelta T \sim e^{-t/\tau }\) for \(t>0\) (Fig. 6b).

The experimental set-up used in [20] for the ensemble modeling of mid-latitude atmospheric circulation (a). The dimensions are \(L= 12\) cm, \(H = 4.5\) cm, denoting the horizontal scale and fluid depth, respectively. Time-dependent temperature contrast \(\varDelta T\) was changing as shown in the bottom graph of panel b. (The practically indistinguishable green curves represent the forcing time series from all members and the black curve shows their mean.) The top panel of b shows the ensemble of temperature response in terms of spatially averaged temperature \(\langle T \rangle (t)\) as obtained from three temperature sensors whose locations are marked in panel a. Solid and dotted black curves represent the ensemble mean and the upper- and lower bounds of the instantaneous ensemble standard deviation interval, respectively. Coloring is also added to distinguish between the “stationary” (turquoise) and “changing” (yellow) sections, and one exemplary ensemble member (red), shifted by \(+2.5 ^\circ C\) for visibility. Panel c shows the histograms of ensemble standard deviations about the ensemble mean (determined for a large number of time instants) for the “stationary” (\(t<0\)) and “changing” (\(t > 0\)) sections. The color coding is as in panel b) (Color figure online)

Addressing spatio-temporal variability in the water surface temperature field, ‘traditional’ single-realization measures and their ensemble-based counterparts were compared, e.g. standard deviations, spatial and temporal spectra and characteristic timescales. As an example of a rather typical finding, we briefly discuss the case of standard deviations. When computing running standard deviations within \(501\mathrm {s}\) long windows of records of spatially averaged temperature from the ‘stationary’ (\(t<0\)) and ‘changing’ (\(t>0\)) sections of any given ensemble member (following appropriate detrending) the authors did not find significant differences in the distributions of the obtained running standard deviation values, thus no clear evidence was found that the nature of thermal fluctuations in a changing model climate would differ from that in the stationary part of the same run. However, when the ensemble standard deviations were considered about the ensemble mean (calculated at each time instant t) it was found that the histograms of these values differed markedly in the \(t<0\) and \(t>0\) intervals both in terms of their mean and their skewness (Fig. 6c). This observation can be interpreted as a manifest difference between probability density functions of the (snapshot) attractors corresponding to the ‘stationary’ and ‘changing’ sections of the dynamics.

Besides climate-related aspects it is worth noting that the ensemble approach may be the proper way to conduct fluid dynamics experiments in which non-equilibrium (non-ergodic) processes and turbulence are involved, i.e. phenomena characterized by inherent internal variability. The saying ‘one experiment is no experiment’ is of course probably just as old as physics itself, but what experimenters usually mean by it is the separation of measurement errors (noise) from significant data. However, in non-stationary fluid dynamics problems fluctuations are inherent, even dominant features of the investigated nonlinear processes and the aim of repeated experiments can be the exploration of internal variability. A well-known example – and a research area where ensemble statistics are already widely accepted and used – is the case of laminar-to-turbulent transition in pipe flows [119,120,121]. Perturbing a laminar background flow with a small standardized injection of matter yields the formation of transient turbulent structures (so-called puffs and slugs) with a finite lifespan. This lifespan can vary largely in different experimental runs due to the nonlinear nature of the processes involved. Thus, only an ensemble statistics of these lifespans from a multitude of experiments (that are initiated identically within measurement precision) can provide meaningful information of these interesting intermittent phenomena, as demonstrated in e.g. [121]. In this example, the external parameters are fixed; a particularly wide range of applications of ensemble experiments is expected in the future in cases characterized by drifting parameters.

8 Splitting of the Snapshot Attractor

It is a general feature of multistable dynamical systems [122], where multiple stable asymptotic states coexist in some range of the parameters (or forcing) that a parameter drift may result in drastic changes in the system’s behaviour. It was demonstrated in various studies that a sharp transition between the possible asymptotic states may take place. This behavior is referred to as a tipping transition, or critical transition. In terms of climate dynamics, the stopping of the thermohaline ocean circulation [123] or the occurrence of “snowball Earth events” [124,125,126] are important examples.

Multistability of the Earth’s climate was first found using conceptual, energy balance models (e.g. the one-dimensional diffusive, Ghil–Sellers [127] model), and later in intermediate complexity models, like PlaSim [128]. Multistability comes from the fact that a type of instability appears in these models [129,130,131], which is referred to as a saddle state, or Melancholia state. This unstable equilibrium is embedded in the boundary between the basins of attraction of the two stable climates. Unlike the attracting climate regimes, the Melancholia state is characterized in [130] by a planet that is ice-covered up to the midlatitudes. It was also demonstrated that the Melancholia state plays an important role in noise-induced transitions between the stable climate regimes [132].

In low-dimensional systems that lack any kind of internal variability, Ashwin and coworkers [133, 134] used the framework of pullback attractors to investigate tipping phenomena. They were able to formulate conditions under which tipping may happen. However, not much is known about such transitions in systems with rich internal variability (e.g. the climate system). Since in these systems a single simulated trajectory does not necessarily carry any predictive meaning, we argue that in general, the theory of parallel climate realizations should be applied to these problems, too [135].

An important property of the climate system is that for some range of fixed parameter values, it also allows two coexisting usual (stationary) attractors. The main difference from regular multistability is that both of these attractors are chaotic-like, given the complexity of the system. Let us now consider a scenario during which a parameter S drifts through a region where one of the coexisting chaotic attractors loses stability. After passing the bifurcation point, the parameter S returns abruptly to the initial value. Even when initializing the ensemble entirely inside one of the basins of attraction (that belongs to the initial parameter value), only a fraction of the ensemble may end up on the usual attractor on which the ensemble was started. During the returning part of the parameter drift, at the point when this usual attractor reappears, the snapshot attractor (as an extended object) may overlap with the basin of attraction of both of the coexisting usual attractors. The ensemble’s subset that overlaps with the (time evolving) basin is then captured by the given attractor. Through this mechanism it is possible for snapshot attractors to split into two unconnected parts. Strictly speaking, the snapshot (and the pullback) attractor remains a single object, but for some practical purposes, it is reasonable to treat the two parts as separate entities. In particular, for initializations taking place after the splitting event, they are two snapshot attractors, with their own basin of attraction, since it is no longer possible for trajectories to cross from one part to the other.

Left panel: Schematic diagram in a bistable system showing two coexisting chaotic-like attractors in the projection to an arbitrary variable, A (for visualization), as a function of the stationary parameter value, S. Right panel: Schematic illustration of the time dependence of the variable A over the ensemble subjected to the S(t) parameter drift scenario (shown in the lower part of the panel). Internal variability is represented by the gray band, the variance of the ensemble. The ensemble average is indicated by the thick black curve. The ensemble splits at about year 300. After this time, a red (blue) stripe illustrates the distribution of the realizations ending up on the red (blue) colored usual attractors in the left panel. The average of these subensembles is marked by a dark line (Color figure online)

This phenomenon is depicted schematically in Fig. 7. In the left panel, the two coexisting usual chaotic-like attractors, with internal variability (red and blue colors) are shown by projecting them onto a single variable, A. This may stand for e.g. surface temperature or any other relevant quantity. The right panel illustrates the time dependence of A in an ensemble subjected to a drift in parameter S by the scenario given in the lower panel. The red and blue bands encompass those members of the ensemble that end up on the corresponding chaotic attractors by the end of the scenario. Initially, these coincide and make up a single connected part of the snapshot attractor (grey band). After a certain time the snapshot attractor splits and two unconnected parts (colored red and blue in Fig. 7) appear, and the transition becomes impossible between them.

In this view, it is not useful to talk about a single average/typical behaviour, after the splitting at least. Simply averaging the variable over the full ensemble (the full snapshot attractor) yields a value which is far from any realization, and would not reflect any of the possible states permitted by the system (the average would fall into a forbidden region in the terminology of Sect. 4).

Once the two branches have separated, it makes more sense to consider the two subensembles, and characterizing the corresponding climate regimes by their own parts of the natural measure. For example, one can define an average within each of the separated ensemble’s disjoint components.

The separation of the snapshot attractor to two unconnected branches, between which transition of trajectories is not possible, stems from the fact that the corresponding stationary system is not ergodic in the sense of the existence of a unique global asymptotic probability measure [136]. As a consequence, the time-dependent system remains inergodic [22], even if the ensemble starts within the basin of one of the attractors since the ultimate outcomes are described in practice by two distinct distributions, see Fig. 7. The uniqueness of the full measure associates a well-defined probability of ending up on each climate regime.

The probabilistic nature of the outcomes was investigated in a similarly bistable system, which also contains noise [132]. Lately, the limiting case was also discussed, where the strength of the noise goes to 0, which results in a deterministic system. In this limit it was found that the probabilities associated to ending up on the stable attractors is either 1 or 0, and this can be exchanged between the attractors by tuning a parameter [137].

The mechanism described provides an example applicable to a snowball Earth transition [138] when the realization of tipping might have a nonzero probability in a deterministic system, which is dependent on the particular forcing scenario.

9 Spreading of Pollutants in a Changing Climate