Why America Doesn’t Really Make Solar Panels Anymore

America invented silicon solar cells in the 1950s. It spent more on solar R&D than any other country in the 1980s. It lost its technological advantage anyway.

Every week, our lead climate reporter brings you the big ideas, expert analysis, and vital guidance that will help you flourish on a changing planet. Sign up to get The Weekly Planet, our guide to living through climate change, in your inbox.

You wouldn’t know it today, but the silicon photovoltaic solar cell—the standard, black-and-copper solar panel you can find on suburban rooftops and solar farms—was born and raised in America.

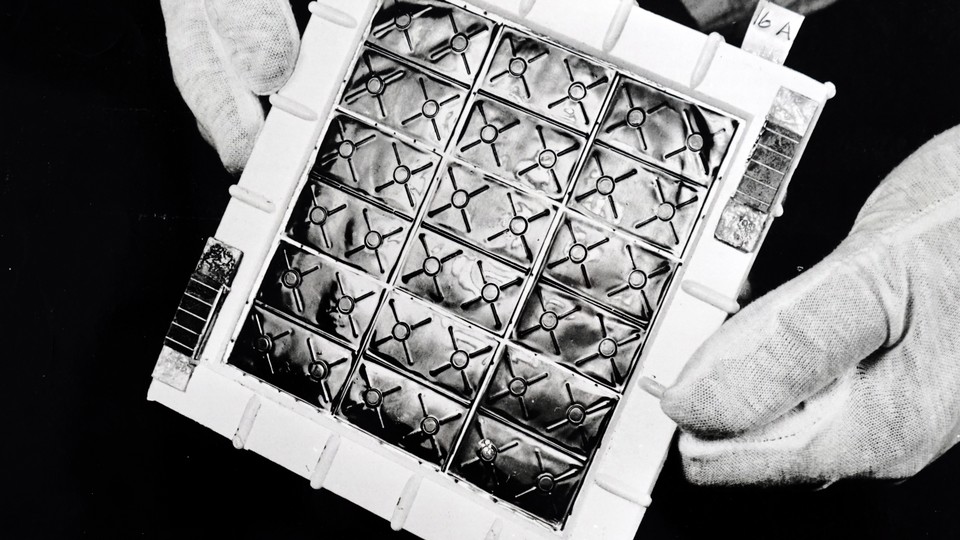

The technology was invented here. In 1954, three American engineers at Bell Labs discovered that electrons flow freely through silicon wafers when they are exposed to sunlight.

It was deployed here. In 1958, the U.S. Navy bolted solar panels to Vanguard 1, the second American satellite in space.

And for a time, it was even made here. In the 1960s and ’70s, American companies dominated the global solar market and registered most solar patents. As late as 1978, American firms commanded 95 percent of the global solar market, according to one study.

The key phrase being “for a time.” Solar panels aren’t really made in the United States anymore, even though the market for them is larger than ever. Starting in the 1980s, leadership in the industry passed to Japan, then to China. Today, only one of the world’s 10 largest makers of solar cells is American.

For the past few decades, this kind of story—of invention, globalization, and deindustrialization—has been part of the background hum of the American economy. Lately, policy makers seem eager to do something about it. Last week, a robust and bipartisan(!) majority in the Senate passed a bill aimed at preserving America’s “technological competitiveness” against China. It will spend more than $100 billion on basic research and development over the next several years.

And as part of his infrastructure proposal, Joe Biden has asked Congress to authorize $35 billion for clean-energy R&D. Observers on the left have painted this figure as pitifully small, pointing out that it’s about equal to what Americans spend on pet food every year.

I sympathize with their concerns. But I’m writing about these proposals because I have a bigger problem with them: I’m not sure R&D is the answer to our problems. Or, at least, I’m not sure the kind of R&D that Congress wants to authorize is the answer to our problems.

Let’s back up. R&D generally refers to spending on research that doesn’t have an obvious or immediate market application. The U.S. leads the world in R&D spending, and has done so for decades, although China is in the No. 2 spot and gaining. R&D might seem like an unfathomably boring topic, akin to arguing about medical data or grant approvals, but it revolves around some of the most profound—and unanswered—questions of industrial civilization: Why do some technologies get developed instead of others? Why do some countries become richer faster than others? How can we materially improve people’s lives as fast as possible—and can the government do anything to help? Above all, where does economic growth come from? This is what we’re fighting about when we fight about R&D.

And this is why I think the history of the solar industry is so important. (The following account is indebted to my reading and talking with Max Jerneck, a researcher at the Stockholm School of Economics who has documented the history of solar energy in the U.S. and Japan.)

In the late 1970s, it was not obvious that the American solar industry was in danger. President Jimmy Carter and Congress had just established the Department of Energy, which promised to develop new energy technologies with the same seriousness that the U.S. devotes to developing new military technologies. Solar engineers saw a bright future. But then a series of changes racked the American economy. The Federal Reserve jacked interest rates up to all-time highs, which made it harder for Americans to obtain car loans, while strengthening the dollar against other currencies, which made it difficult for American exporters to sell goods abroad. Presidents Carter and Ronald Reagan loosened rules against “corporate raiding,” allowing Wall Street traders to force companies to close or spin off part of their business. After 1980, Reagan also weakened federal environmental rules while dismantling the new Department of Energy, removing support for alternative energy sources such as solar power.

American manufacturers had already been struggling to compete with imports from East Asia. Now they foundered. Start-ups shut down; experts left the industry. Corporate raiders forced oil companies, such as Exxon, to sell or close their small solar R&D divisions. The United States, the country that once produced nearly all the world’s solar panels, saw its market share crash. In 1990, U.S. firms produced 32 percent of solar panels worldwide; by 2005, they made only 9 percent.

Japan benefited from this sudden abdication. In the 1980s, Japanese, German, and Taiwanese firms bought the patents and divisions sold off by American firms. Whereas Japan had no solar industry to speak of in 1980, it was producing nearly half the world’s solar panels by 2005.

This may seem like the kind of classic tale that Congress is hoping to prevent. Yet R&D had almost nothing to do with the collapse of the U.S. solar industry. From 1980 to 2001, the United States outspent Japan in solar R&D in every year but one. Let me repeat: The U.S. outspent Japan on R&D in every year but one. It lost the technological frontier anyway.

The problem wasn’t then—and isn’t now—America’s lack of R&D spending. It was the set of assumptions that guides how America thinks about developing high technology.

The American system, in the 1980s and today, is designed to produce basic science—research with no immediate obvious application. In the U.S., in the early ’80s, most solar companies were preparing for the predicted mass markets of the future: residential rooftops and grid-scale solar farms. Both required solar panels to get significantly cheaper and more efficient than they were at the time: They required R&D, in other words.

But Japan’s industrial policy—as orchestrated by its powerful Ministry of International Trade and Industry—focused on finding a commercial application for technologies immediately. It also provided consistent, supportive funding for companies that wanted to invest in finding applications. As such, Japanese companies were pressured to incorporate solar panels into products as soon as possible. Within a few years, they had found solar panels’ first major commercial application, putting them inside pocket calculators, wristwatches, and other consumer electronics. Because those devices didn’t require much electricity, they were well served by solar panels as they existed in the 1980s, not as whatever an R&D study said they could notionally become in the future.

And Japan’s willingness to ship fast and imperfectly eventually helped it develop utility-scale solar. As Japanese firms mass produced more solar panels, they got better at it. They learned how to do it cheaply. This “learning by doing” eventually brought down the cost of solar cells more than America’s theoretical R&D ever managed to. More recently, Chinese firms have emulated this technique in order to eat Japan’s share of the global solar industry, Greg Nemet, a public-policy professor at the University of Wisconsin and the author of How Solar Energy Became Cheap, told me.

Zoom out a bit, and you can see a deeper problem with how Americans think about technology. We tend, perhaps counterintuitively, to overintellectualize it. Here’s an example: You have probably lived with a leaky faucet in your home at some point, a sink or shower in which you had to get the cold knob just right to actually shut off the flow of water. How did you learn to turn the knob in just the right way—did you find and read a college textbook on Advanced Leaky-Faucet Studies, or did you just fiddle with the knob until you learned how to make it work? If you had to write down instructions for turning the knob so it didn’t leak, would you be able to do it?

Getting the faucet not to leak is an example of what anthropologists call tacit knowledge, information that is stored in human minds and difficult to explain. High technology requires much more tacit knowledge than the American system usually admits. The understanding of how to mass produce a car or solar panel is not stored in a book or patent filing; it exists in the brains and bodies of workers, foremen, and engineers on the line. That’s why the places where engineers, designers, and workers come together—whether in Detroit, Silicon Valley, or Shenzhen—have always been the fount of progress.

The American R&D system is designed to fix an alleged failure of the free market—that no corporation has an incentive to fund science for science’s sake. To be sure, this approach has brought advances, especially in medicine: The COVID-19 mRNA vaccines drew on years of thankless “pure” R&D. Yet, as the Niskanen Center scholar Samuel Hammond writes, this distinction—between pure and applied science—is illusory. R&D is useful, but ultimately only organizations deploying technology at a mass scale can actually advance the technological frontier. We don’t need the government to fund more science alone; we need the government to support a thriving industrial sector and incentivize companies to deploy new technology, as Japan’s government does.

The Biden administration seems mindful of some of the problems with investing only in “pure” R&D. The American Jobs Plan proposes spending $20 billion on new “regional innovation hubs” that will unite public and private investment to speed up the development of various energy technologies. It also aims to establish 10 new “pioneer facilities,” large-scale demonstration projects that will work on some of the most challenging applied problems in decarbonizing, such as making zero-carbon steel and concrete. I think those are more promising than throwing more money at R&D per se.

Addressing climate change requires us to get R&D right. The United States is responsible for 11 percent of annual global greenhouse-gas emissions today. Its share has fallen since the 1990s and will keep dwindling. Yet no matter its share of global carbon pollution, it remains the world’s R&D lab and its largest, richest consumer market. One of the best ways that the U.S. can serve the world is to develop technologies here that make decarbonization cheap and easy, then export them abroad. But in order to fulfill that role, it will have to invest in real-world technologies: A flood of patents from university researchers won’t save the world. Engineers, workers, and scientists working together might.

One more short thought about all of this: I realize that it might seem gauche to say that solar panels are an American technology. How can science and technology have a nationality when they are the patrimony of humanity? (Jonas Salk, the inventor of the polio vaccine, when asked who owned the patent for his formula: “Could you patent the sun?”) But to describe solar panels as “American” isn’t to say that only Americans are entitled to use or make them. It’s to note, first, that technologies are developed in specific places, by specific people. We should focus on what kinds of places do the most to drive the good kind of technological progress forward. And it’s a nod, second, to a reality that the pandemic made unavoidable: A large, rich, and industrialized market such as the United States (or the European Union) should be able to make enough goods for itself in an emergency. That the U.S. could not produce its own face masks last year, for instance, was absurd. No country should try to make every product, of course, but countries are, for now, the basic units of the global economic system, and they should be able to provide high-technology necessities for their residents in an emergency.
