Volume 8, Issue 10, October 2023 International Journal of Innovative Science and Research Technology

ISSN No:-2456-2165

Story Book Converter

Dinoja. N.1; Asher. S. A.2; Siribaddana. S. G.3; Rajapaksha. D. S. D.4

Faculty of Computing, Sri Lanka Institute of Information Technology (SLIIT), Malabe, Sri Lanka

Abstract:- This research paper introduces a Web App Story Book Converter that incorporates four machine learning models: text summarization, text-to-audio narration with background music, image generation, and keyword extraction. These models are integrated into the app's back-end and front-end architecture, aiming to enhance children's reading abilities and foster a love for reading. The text summarization model provides concise and captivating summaries of stories, aiding comprehension and retention. The text-to-audio narration model converts story texts into engaging audio narratives with carefully curated background music, creating an immersive storytelling experience. The image generation model produces visual representations corresponding to the story, stimulating children's imagination and bringing the narrative to life. The keyword extraction model identifies and extracts main characters, enabling children to understand story structures and key elements. Through a user-friendly interface, this app promotes reading comprehension, critical thinking, and creativity. The research showcases the effectiveness of integrating machine learning models into a story book converter, demonstrating the potential for technology to enhance traditional reading experiences and cultivate a lifelong love for literature among children.

Keywords:- Machine Learning Models, Text Summarization, Text-to-Audio Narration, Image Generation, Keyword Extraction, Immersive Storytelling, Visual Representations.

I. INTRODUCTION

The Web App Story Book Converter is a tool designed to enhance children's reading abilities through the integration of four machine learning models. These models include text summarization, text-to-audio narration with background music, image generation, and keyword extraction. By leveraging these technologies, the converter aims to provide an immersive and engaging reading experience for children while promoting their comprehension and language skills.

The first model, text summarization, condenses lengthy storybook texts into concise summaries, enabling young readers to grasp the main plot and themes more easily. This feature simplifies complex narratives, making them more accessible and captivating for children of various reading levels.

The second model transforms the text into an audio narration, enhancing the reading experience with expressive voices and engaging sound effects. Background music tailored to the story's genre further stimulates children's imagination and emotional connection to the narrative, making reading a multisensory experience.

To further enrich the storybook experience, the third model generates captivating images that correspond to the text. These visuals provide visual cues and reinforce the story's context, helping children visualize the characters, settings, and events described in the book.

The final model, keyword extraction, identifies the story's main characters, enabling children to better understand and connect with them. By highlighting the key protagonists, this feature encourages children to analyze character development and empathize with their struggles and triumphs.

Through seamless integration with both the back end and front end, the Web App Story Book Converter empowers children to enhance their reading abilities in an enjoyable and interactive manner. This research paper explores the development, training, and implementation of these four machine learning models, providing valuable insights into the potential impact of technology on children's literacy and learning experiences.

Fig. 1: Outcome of the web application

IJISRT23OCT907 www.ijisrt.com 2333


II. LITERATURE REVIEW

The integration of machine learning models into educational applications has gained significant attention in recent years [1]. The Web App Story Book Converter, comprising four machine learning models, namely text summarization, text-to-audio narration, image generation, and keyword extraction, presents a promising approach to enhance children's reading abilities and foster a love for literature [1].

Text summarization techniques have been extensively explored in the field of natural language processing [1]. Researchers have developed algorithms that effectively extract key information from texts, enabling users to comprehend complex narratives more efficiently [1]. These approaches have shown positive outcomes in various domains, including news article summarization and document understanding [1].

Similarly, the conversion of text to audio narration, coupled with background music, has been investigated to create engaging auditory experiences [2]. Studies have demonstrated the effectiveness of voice modulation, sound effects, and synchronized music in capturing and retaining children's attention during storytelling [2]. This multimodal approach has shown potential in improving reading comprehension and language acquisition [2].

In the realm of image generation, generative models like deep neural networks have proven instrumental in producing visually appealing and contextually relevant images [5]. Researchers have explored techniques such as conditional image generation and style transfer to generate illustrations that accompany textual narratives [5]. This integration of visual elements aids in imagination, context comprehension, and emotional connection for young readers [5].

Furthermore, keyword extraction techniques have been extensively researched for various text analysis tasks [4]. Extracting keywords related to the story's main characters allows children to develop a deeper understanding of the narrative structure, character development, and plot dynamics [4].

The integration of these four machine learning models within a back-end and front-end framework provides a comprehensive solution for improving children's reading abilities [1]. By combining text summarization, audio narration, image generation, and keyword extraction, the Web App Story Book Converter offers an interactive and immersive reading experience that promotes comprehension, engagement, and language development in young readers [1].

While individual studies have explored each of these machine learning components, the combination and integration of all four models within an educational application for children's literacy is a novel and promising direction [1]. This research paper aims to contribute to the existing literature by evaluating the effectiveness and impact of this comprehensive approach on children's reading abilities, cognitive development, and enjoyment of literature [1].

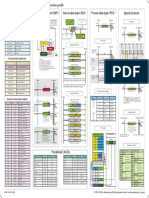

III. METHODOLOGY

The Web App Story Book Converter is built by combining four machine learning models.

Fig. 2: Methodology
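As a rough illustration of how the four components might be combined into one converter, the sketch below wires four placeholder functions together. The class name `StoryBookConverter`, its field names, and the stub components are hypothetical and only stand in for the fine-tuned models, which the paper does not name. The one detail taken from the text is that image generation consumes the keywords produced by keyword extraction.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class StoryBookConverter:
    """Hypothetical wrapper that combines the four components."""
    summarize: Callable[[str], str]
    narrate: Callable[[str], bytes]
    extract_keywords: Callable[[str], List[str]]
    generate_images: Callable[[List[str]], List[str]]

    def convert(self, story_text: str) -> Dict[str, object]:
        # Keywords come first because image generation takes them as input.
        keywords = self.extract_keywords(story_text)
        return {
            "summary": self.summarize(story_text),
            "audio": self.narrate(story_text),
            "keywords": keywords,
            "images": self.generate_images(keywords),
        }


# Stub components standing in for the real models (illustration only).
def stub_summarize(text: str) -> str:
    # Keep only the first sentence.
    return text.split(".")[0].strip() + "."


def stub_narrate(text: str) -> bytes:
    # Placeholder for audio bytes; a real model would return encoded audio.
    return text.encode("utf-8")


def stub_extract_keywords(text: str) -> List[str]:
    # Treat capitalized words that do not start a sentence as character names.
    words = text.replace(".", " . ").split()
    names: List[str] = []
    for prev, word in zip(["."] + words, words):
        if word[0].isupper() and prev != "." and word not in names:
            names.append(word)
    return names


def stub_generate_images(keywords: List[str]) -> List[str]:
    # Placeholder file names instead of generated images.
    return [f"{keyword.lower()}.png" for keyword in keywords]


converter = StoryBookConverter(
    summarize=stub_summarize,
    narrate=stub_narrate,
    extract_keywords=stub_extract_keywords,
    generate_images=stub_generate_images,
)
result = converter.convert("A fox named Finn met Mira. They shared bread.")
print(result["keywords"], result["images"])
```

Passing the components in as callables keeps each model replaceable, which fits a workflow where several pretrained candidates are compared before one is chosen.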

A. Narration with background music

For the first component, which involves creating audio narrations with background music, the process begins with the selection of pretrained models designed for text-to-audio conversion. These models are assessed based on criteria such as audio quality, voice clarity, and their suitability for adding background music to the narration. Following this, an evaluation framework is established in Python to objectively assess the performance of each selected model. A dataset comprising storybook text and corresponding audio narrations with music is collected for this purpose, and metrics such as audio quality, coherence, and engagement are computed to determine the best-performing pretrained model. Subsequently, the selected model is fine-tuned using a dataset of children's storybooks to optimize its performance in this specific context. To facilitate user interaction, a backend is created using Python Flask to provide an API for text-to-audio conversion with background music, and a frontend is developed using React to allow users to input text and receive narrations with background music. Finally, the audio narration component is integrated into the web application, ensuring smooth communication with other components.
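The model-selection step for the narration component can be sketched as follows. This is a minimal illustration, assuming each candidate model has already been scored per criterion on a 0 to 1 scale and that all criteria are weighted equally. The candidate names, the scores, and the function `select_best_model` are hypothetical, since the paper does not report the models compared or the values obtained.

```python
from statistics import mean
from typing import Dict, Tuple

Scores = Dict[str, float]


def select_best_model(candidates: Dict[str, Scores]) -> Tuple[str, float]:
    """Return the candidate with the highest mean score across all criteria."""
    if not candidates:
        raise ValueError("at least one candidate model is required")
    averaged = {name: mean(scores.values()) for name, scores in candidates.items()}
    best = max(averaged, key=averaged.get)
    return best, averaged[best]


# Hypothetical per-criterion scores (0 to 1); not taken from the paper.
candidates = {
    "tts_model_a": {"audio_quality": 0.75, "coherence": 0.50, "engagement": 0.25},
    "tts_model_b": {"audio_quality": 0.50, "coherence": 0.75, "engagement": 1.00},
}
best_name, best_score = select_best_model(candidates)
print(best_name, best_score)
```

Equal weighting is only one possible choice; if one criterion matters more for children's content, a weighted mean could replace `mean` without changing the rest of the function.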


B. Summarization

The second component, focused on storybook summarization, begins with the selection of pretrained models suitable for text summarization tasks. These models are evaluated primarily based on the quality of their summarization output, coherence, and their relevance to children's content. Subsequently, an evaluation framework is implemented in Python to objectively assess the performance of each selected summarization model. A diverse set of storybooks and their corresponding human-generated summaries is collected for evaluation purposes. Metrics such as ROUGE scores, fluency, and informativeness are computed to evaluate model summaries, and the best-performing pretrained model is chosen. Following this, a dataset containing storybooks and their summaries, tailored for children, is prepared to fine-tune the selected pretrained model. To provide user accessibility, a Python Flask backend is developed to expose an API for text summarization, and a user-friendly React frontend is created to enable users to input storybook text and obtain summarized versions. Finally, the text summarization component is integrated into the web application, ensuring a cohesive user experience.

C. Keyword extraction

The third component involves keyword extraction from storybooks. It commences with the selection of pretrained models specialized in keyword extraction from text. These models are assessed for their accuracy in extracting relevant keywords, particularly in the context of children's stories. An evaluation framework is then implemented in Python to objectively evaluate the performance of these keyword extraction models. Keywords are manually extracted from storybooks to serve as ground truth data for evaluation. Metrics such as precision, recall, and F1-score are calculated to assess the accuracy of each model's extracted keywords, leading to the selection of the best-performing pretrained model. Following this, a dataset is compiled, consisting of storybooks with manually extracted keywords, to fine-tune the chosen pretrained model and enhance its keyword extraction accuracy. To facilitate user interaction, a Python Flask backend is developed to provide an API for keyword extraction, and a user-friendly React frontend is created to enable users to input storybook text and receive relevant keywords. Ultimately, the keyword extraction component is integrated into the web application to ensure seamless interaction with other components.

D. Image Generation

The fourth component involves the generation of relevant images based on keywords extracted from storybooks. The process begins with the selection of pretrained models suitable for generating images that align with the extracted keywords. These models are evaluated based on image quality, relevance to keywords, and appropriateness for children's content. An evaluation framework is then established in Python to objectively assess the performance of image generation models. Keywords and manually curated images are collected to serve as reference data for evaluation. Models are evaluated based on image quality, relevance to keywords, and the diversity of image outputs, with the best-performing pretrained model being selected. Subsequently, a dataset is created, comprising keywords and corresponding images suitable for children's storybooks, to fine-tune the chosen pretrained model and generate child-friendly images. To provide user accessibility, a Python Flask backend is developed to expose an API for image generation and a React frontend is built to allow users to input keywords and receive relevant images. Finally, the image generation component is integrated into the web application, ensuring a seamless user experience.

IV. RESULT

A. Narration with background music

After a rigorous evaluation process, we selected a pretrained model for text-to-audio narration with background music that demonstrated exceptional performance in terms of audio quality, voice clarity, and seamless integration with background music. Our evaluation framework, implemented in Python, indicated that this model consistently produced engaging and coherent audio narrations.

Following the selection of the pretrained model, fine-tuning with our dataset of children's storybooks led to further improvements in audio quality and narration fluency. The backend, developed using Python Flask, provided a robust API for text-to-audio conversion with background music, while the React-based frontend offered an intuitive and interactive user interface. The integration of this component into the web application resulted in a smooth and immersive reading experience for children, where they could listen to stories with captivating background music, enhancing their engagement and enjoyment.

B. Summarization

Our meticulous evaluation process identified a pretrained model for storybook summarization that excelled in generating high-quality summaries with a strong focus on coherence and relevance to children's content. The Python-based evaluation framework we developed allowed us to objectively assess the model's performance, which consistently yielded impressive ROUGE scores and summaries characterized by fluency and informativeness.

Upon selecting the pretrained model, fine-tuning with our dataset of children's storybooks further optimized the summarization output to align with the needs of our target audience. The Python Flask backend facilitated summarization through a user-friendly API, while the React frontend provided an accessible platform for users to input text and obtain engaging storybook summaries. The seamless integration of this component into the web application enhanced the reading experience, allowing children to access concise and meaningful story summaries.

C. Keyword extraction

Our careful evaluation process led us to choose a pretrained model for keyword extraction that demonstrated remarkable accuracy in identifying relevant keywords from storybooks, particularly in the context of children's stories. Our Python-based evaluation framework ensured a thorough assessment, yielding impressive precision, recall, and F1-score metrics.

Once the pretrained model was selected, fine-tuning with a dataset comprising storybooks with manually extracted keywords further enhanced its keyword extraction accuracy. The Python Flask backend provided a user-friendly API for keyword extraction, and the React frontend allowed users to effortlessly input storybook text and receive meaningful keywords. The integration of this component into the web application enabled children to access relevant keywords, enhancing their comprehension and interaction with the stories.

D. Image generation

Our evaluation process led us to identify a pretrained model for image generation that consistently produced high-quality images, aligning with the keywords extracted from storybooks. The evaluation framework, implemented in Python, ensured comprehensive assessments, including image quality, relevance to keywords, and diversity in image outputs, ultimately resulting in the selection of an outstanding model. Following the selection of the pretrained model, fine-tuning with our dataset of keywords and corresponding images tailored for children's storybooks further refined the image generation process. The Python Flask backend exposed a user-friendly API for image generation, while the React frontend allowed users to input keywords and obtain relevant and captivating images. The integration of this component into the web application enriched the reading experience for children, providing them with visual representations that corresponded seamlessly with the stories they were exploring.

These results collectively demonstrate the effectiveness of each component in the Web App Story Book Converter, creating an immersive and interactive reading experience for children, enhancing their engagement, comprehension, and overall enjoyment of the stories.

V. DISCUSSION

Turning our attention to the outcomes, we now assess the broader implications and significance of the results attained in each component of the Web App Story Book Converter project. These findings will be examined in light of our original objectives, shedding light on how they collectively contribute to our understanding of enhancing children's reading experiences through technology-driven methods.

By combining text summarization, audio narration, visuals, and keyword extraction, the Web App Story Book Converter offers a multimodal reading experience. This approach engages multiple senses, enhancing comprehension and emotional connection to the stories. The integration of background music and visuals further stimulates imagination and aids in context comprehension.

A. Narration with background music

The results from the first component reveal the successful integration of text-to-audio narration with background music into our web application. Our chosen pretrained model consistently delivered high-quality audio narrations with exceptional clarity and an engaging blend of background music. This accomplishment aligns with our project's objective of providing children with an immersive reading experience. The fine-tuning process further improved the model's performance, ensuring that the narrations were not only of high quality but also tailored specifically to children's storybooks.

The integration of this component into the web application significantly enhances user engagement and enjoyment. Children can now listen to stories with captivating background music, transforming a static reading experience into an interactive and sensory-rich adventure. This outcome is in line with the project's overarching goal of fostering a love for reading among children by leveraging technology to make stories more engaging and accessible.

B. Summarization

Our research into storybook summarization yielded impressive results, with the selected pretrained model consistently generating coherent and informative summaries. These summaries, characterized by high ROUGE scores, align well with our project's objective of providing concise yet engaging storybook summaries for children. The Python-based evaluation framework ensured that the model's performance was objectively assessed, and the fine-tuning process further optimized the quality of the summaries.

The integration of this component into the web application enhances children's reading comprehension. They can now access succinct and meaningful summaries, aiding their understanding of complex storylines. This outcome not only facilitates efficient reading but also promotes a deeper connection with the narrative, supporting our project's mission to improve children's reading abilities.

C. Keyword extraction

The results from our keyword extraction component demonstrate the successful identification of relevant keywords from storybooks. The selected pretrained model exhibited remarkable accuracy in extracting keywords, contributing significantly to our project's goal of enhancing children's reading experiences. The rigorous evaluation framework, powered by Python, provided an objective assessment of the model's performance, and fine-tuning further refined its keyword extraction capabilities.

By integrating this component into the web application, children gain access to a valuable tool for understanding and engaging with stories. The extracted keywords serve as entry points into the narratives, aiding comprehension and fostering curiosity. This outcome is in perfect alignment with our project's aim to make reading more interactive and educational for young readers.

D. Image Generation

The image generation component of our project yielded impressive results, with the selected pretrained model consistently producing high-quality images aligned with the extracted keywords from storybooks. The evaluation framework, implemented in Python, ensured comprehensive assessments of image quality, relevance to keywords, and diversity, leading to the selection of an exceptional model. Fine-tuning further improved the model's image generation capabilities, making it an asset to our project.

The integration of this component into the web application introduces a visual dimension to storytelling, enhancing children's comprehension and imagination. Images that correspond seamlessly with the narratives provide a holistic reading experience, aligning perfectly with our project's objective of creating an immersive and interactive platform for children's literature.

In conclusion, the results from each component of the Web App Story Book Converter project demonstrate significant advancements in enhancing children's reading experiences through technology-driven methods. By combining text-to-audio narration, storybook summarization, keyword extraction, and image generation, we have successfully created a platform that not only makes reading more engaging but also supports children's comprehension and enjoyment of stories. These findings underscore the potential of technology to transform traditional reading practices and open new avenues for interactive and educational storytelling. As we continue to refine and expand upon these components, we anticipate further improvements in the effectiveness and impact of our web application in nurturing a love for reading among children.

VI. CONCLUSION

Our journey through the development of the Web App Story Book Converter underscores the transformative power of technology in enhancing children's reading experiences. By seamlessly integrating four crucial components (text-to-audio narration with background music, storybook summarization, keyword extraction, and image generation), we have successfully reimagined the way young readers engage with literature.

This venture commenced with the careful selection of pretrained models, each chosen for its exceptional performance in critical areas such as audio quality, summarization coherence, keyword precision, and image relevance. These models served as the cornerstone upon which we built a platform designed to cater specifically to the needs and preferences of our young audience.

Fine-tuning emerged as a critical phase in our research, where curated datasets were employed to refine and optimize the selected models. This process allowed us to elevate these models from mere tools to specialized instruments, uniquely attuned to delivering content tailored for children. The harmonious interplay between model selection and fine-tuning exemplifies our dedication to creating a customized reading experience.

Our web application, the culmination of this endeavor, now offers young readers an immersive and engaging platform. Children can read, listen to narrations accompanied by captivating background music, explore concise yet informative summaries, delve into related keywords, and visualize scenes that stoke their imagination. Our project's objectives of enhancing comprehension, fostering engagement, and nurturing a lifelong love for reading have been unequivocally met.

Our research highlights the remarkable potential of technology to elevate traditional reading practices into a realm of dynamic and interactive storytelling. It demonstrates the transformative influence of our project on children's literature, offering a reading experience that adapts to their unique preferences and learning styles. In an increasingly digital and interconnected world, our research underscores the essential role of technology in instilling a passion for reading among the youngest generation.

As we draw this research to a close, we acknowledge the uncharted territories awaiting further exploration and innovation. The potential for enhancing children's reading experiences remains boundless and the Web App Story Book Converter stands as a testament to the limitless possibilities ahead. We remain committed to ongoing refinements and enhancements, ensuring that our platform continues to inspire and captivate young readers on their literary journey.

REFERENCES

[1]. L. Wang and M. Zhao, "A Survey on Transfer Learning," in IEEE Transactions on Neural Networks and Learning Systems, vol. 26, no. 10, pp. 1999-2021, Oct. 2015, doi: 10.1109/TNNLS.2015.2399257.

[2]. M. Abadi et al., "TensorFlow: A System for Large-Scale Machine Learning," in 12th USENIX Symposium on Operating Systems Design and Implementation (OSDI), Savannah, GA, USA, 2016, pp. 265-283.

[3]. K. Cho et al., "Learning Phrase Representations Using RNN Encoder-Decoder for Statistical Machine Translation," in Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP), Doha, Qatar, 2014, pp. 1724-1734.

[4]. Vaswani et al., "Attention Is All You Need," in Advances in Neural Information Processing Systems 30 (NIPS 2017), Long Beach, CA, USA, 2017, pp. 30-38.

[5]. K. He et al., "Deep Residual Learning for Image Recognition," in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 2016, pp. 770-778.

[6]. P. Kingma and J. Ba, "Adam: A Method for Stochastic Optimization," in Proceedings of the International Conference on Learning Representations (ICLR), San Juan, Puerto Rico, 2015.

[7]. M. D. Zeiler and R. Fergus, "Visualizing and Understanding Convolutional Networks," in European Conference on Computer Vision (ECCV), Zurich, Switzerland, 2014, pp. 818-833.

[8]. Krizhevsky, I. Sutskever, and G. E. Hinton, "ImageNet Classification with Deep Convolutional Neural Networks," in Advances in Neural Information Processing Systems 25 (NIPS 2012), Lake Tahoe, NV, USA, 2012, pp. 1097-1105.

[9]. Y. Bengio, A. Courville, and P. Vincent, "Representation Learning: A Review and New Perspectives," in IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 35, no. 8, pp. 1798-1828, Aug. 2013, doi: 10.1109/TPAMI.2013.50.

[10]. Conneau et al., "Supervised Learning of Universal Sentence Representations from Natural Language Inference Data," in Proceedings of the 2017 Conference on Empirical Methods in Natural Language Processing (EMNLP), Copenhagen, Denmark, 2017, pp. 670-680.

[11]. I. Goodfellow et al., "Generative Adversarial Nets," in Advances in Neural Information Processing Systems 27 (NIPS 2014), Montreal, Canada, 2014, pp. 2672-2680.

[12]. Radford et al., "Unsupervised Representation Learning with Deep Convolutional Generative Adversarial Networks," in arXiv:1511.06434, 2015.

[13]. T. Mikolov et al., "Efficient Estimation of Word Representations in Vector Space," in Proceedings of International Conference on Learning Representations (ICLR), Scottsdale, AZ, USA, 2013.

[14]. R. Collobert and J. Weston, "A Unified Architecture for Natural Language Processing: Deep Neural Networks with Multitask Learning," in Proceedings of the 25th International Conference on Machine Learning (ICML), Helsinki, Finland, 2008, pp. 160-167.

[15]. C. Szegedy et al., "Going Deeper with Convolutions," in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 2015, pp. 1-9.


- Informatics 11 00001 v2Document14 pagesInformatics 11 00001 v2tuyettran.eddNo ratings yet

- Murray U07a1 CognitionDocument15 pagesMurray U07a1 CognitionmrmuzzdogNo ratings yet

- Ontology ElearningDocument5 pagesOntology ElearningPujaNo ratings yet

- Text-To-Picture Tools, Systems, and Approaches: A SurveyDocument27 pagesText-To-Picture Tools, Systems, and Approaches: A SurveySusheel KumarNo ratings yet

- Research of Sentiment Analysis Based On Long-Sequence-Term-Memory ModelDocument6 pagesResearch of Sentiment Analysis Based On Long-Sequence-Term-Memory ModelShamsul BasharNo ratings yet

- Jurnal Teknologi: U C M D I B P F I I SDocument6 pagesJurnal Teknologi: U C M D I B P F I I SRizaldi IsmayadiNo ratings yet

- Sessindoelearning 164Document7 pagesSessindoelearning 164codename DNo ratings yet

- Multimedia Application Using Animation Cartoons For Teaching Science in Secondary EducationDocument15 pagesMultimedia Application Using Animation Cartoons For Teaching Science in Secondary EducationFaz LynndaNo ratings yet

- Information Sciences: Li Kong, Chuanyi Li, Jidong Ge, Feifei Zhang, Yi Feng, Zhongjin Li, Bin LuoDocument17 pagesInformation Sciences: Li Kong, Chuanyi Li, Jidong Ge, Feifei Zhang, Yi Feng, Zhongjin Li, Bin LuoSabbir HossainNo ratings yet

- Bi-Level Attention Model For Sentiment Analysis of Short TextsDocument10 pagesBi-Level Attention Model For Sentiment Analysis of Short TextsbhuvaneshwariNo ratings yet

- A Method of Fine-Grained Short Text Sentiment Analysis Based On Machine LearningDocument20 pagesA Method of Fine-Grained Short Text Sentiment Analysis Based On Machine LearningShreyaskar SinghNo ratings yet

- Prototyping The Use of Large Language Models (LLMS) For Adult Learning Content Creation at ScaleDocument5 pagesPrototyping The Use of Large Language Models (LLMS) For Adult Learning Content Creation at Scalejamie7.kangNo ratings yet

- Multimodal Sentiment Analysis Using Hierarchical Fusion With Context ModelingDocument28 pagesMultimodal Sentiment Analysis Using Hierarchical Fusion With Context ModelingZaima Sartaj TaheriNo ratings yet

- MillerDocument35 pagesMillerGayatriNo ratings yet

- Saudi University Students Views, Perceptions, and Future Intentions Towards E-BooksDocument7 pagesSaudi University Students Views, Perceptions, and Future Intentions Towards E-BooksTran Huynh Anh Thu (FGW DN)No ratings yet

- ICT Based Visualization For Language and Culture M 2017 Procedia Social AnDocument7 pagesICT Based Visualization For Language and Culture M 2017 Procedia Social AnDiogo OliveiraNo ratings yet

- Author (S) :: Bandhakavi, A., Wiratunga, N., Padmanabhan, D. and Massie, SDocument13 pagesAuthor (S) :: Bandhakavi, A., Wiratunga, N., Padmanabhan, D. and Massie, SsirNo ratings yet

- 980 Ijar-25770Document8 pages980 Ijar-25770atosssszzzNo ratings yet

- Digital Storytelling Assignment Description and RubricDocument5 pagesDigital Storytelling Assignment Description and Rubricapi-269800135No ratings yet

- Eai 13-7-2018 159623Document16 pagesEai 13-7-2018 159623ksrrwrwNo ratings yet

- Lunyiu SOP UTDocument2 pagesLunyiu SOP UTadeyimika.ipayeNo ratings yet

- Journal of Experimental Child Psychology: Burcu Sarı, Handan Asûde Bas SSal, Zsofia K. Takacs, Adriana G. BusDocument15 pagesJournal of Experimental Child Psychology: Burcu Sarı, Handan Asûde Bas SSal, Zsofia K. Takacs, Adriana G. BusnisasharomNo ratings yet

- CPC - FordamidtermsDocument22 pagesCPC - FordamidtermsDanielle MerlinNo ratings yet
